The Curious Case of “Vegetative Electron Microscopy”

AI Inside for Wednesday, April 16, 2025

Hey everyone! We had a VERY fun episode of AI Inside this week. Before we get there:

Big thanks to those who support us directly via Patreon at PATREON.COM/AIINSIDESHOW, like Jesse!

If you enjoyed the Yann LeCun interview, please do us a favor: head over to Apple Podcasts and LEAVE A REVIEW. Mention the interview and what you thought of it. We would love to do more episodes like that and YOUR FEEDBACK is crucial to that pursuit.

OpenAI Eyes Social Media

OpenAI is getting in on the social media game. According to sources who spoke to The Verge, the company is working on an early internal prototype of a ChatGPT image product that integrates a social feed. Sam Altman has teased a social option in past tweets, and it’s a great way for OpenAI to gain real-time, user-generated data that it doesn’t have to pay others for.

For added context: Meta is reportedly working on bringing a social feed to its AI products, and X already has Grok integrated. They all follow similar paths to the same destination, don’t they? In some ways, this reminds me of Ideogram.

OpenAI Updates: Models, Memory, and More

Here’s a small collection of other OpenAI news that we can knock out in one fell swoop.

Soon, say goodbye to GPT-4.5, as it’s sunsetting in the API to make room for the newest model: GPT-4.1! This new model boasts about 750k words of context, which is more than War and Peace. OpenAI says it “excels” at coding and following instructions, but admits it becomes less reliable the more input tokens it has to manage. Accuracy with 8k tokens is 84%, but with 1 million tokens, it drops to 50%!!

Related to coding, CFO Sarah Friar spoke at a tech summit in London about an “agentic software engineer” or A-SWE. She says it would do all the things software engineers hate to do: its own QA, bug testing, documentation.

OpenAI also announced a big expansion of its memory and customization capabilities. ChatGPT is learning even more about its users over time. Memory isn’t new to ChatGPT, but users often had to ask it specifically to remember certain things, and it was opt-in. Now you can turn on “reference chat history” at the tap of a button, and ChatGPT will draw on all of your past conversations.

Nvidia’s US Manufacturing Push and Export Pressures

Nvidia is ramping up its US manufacturing footprint, with more than 1 million sq ft of space in Arizona and Texas for producing and testing AI chips, including Blackwell processors. It is partnering with TSMC, Foxconn, and others. Nvidia projects this will create hundreds of thousands of US jobs and drive economic activity over the next decade.

Nvidia is also grappling with financial pressures related to US export controls on its advanced AI chips, especially the H20 processor designed for the Chinese market. The restrictions mean immediate revenue loss and growing uncertainty about the company’s future growth. With export restrictions tightening and a tech trade war with China underway, Nvidia has seen steep declines in its stock price. China accounts for a large portion of its data center revenue.

Bill Gates on AI and the Future of Work

Bill Gates is projecting that AI is coming for a few key professions. Speaking on the People by WTF podcast, he singled out teaching and medicine. He sees AI as plugging labor gaps in those areas:

“AI will come in and provide medical IQ, and there won’t be a shortage.”

For education, where schools are largely understaffed and it’s difficult to hire teachers, some schools are replacing SOME teachers with AI tools like ChatGPT for exam prep.

Gates also said AI is coming for blue collar workers—factory, construction, hotel cleaners—hinting that humanoid robots will eventually take the place of human workers. What does this mean for us measly humans?

“You can retire early, you can work shorter workweeks. It’s going to require almost a philosophical rethink about, ‘OK, how should time be spent?’”

This feels very utopian and out of touch with the perception of much of the public, especially in the here and now.

AI Image Generation Trends: The Barbie Box Challenge

ChatGPT image generation has been the source of many trends and memes in recent weeks. One of those is creating action figures, or “The Barbie Box” challenge—the idea of productizing ourselves. I couldn’t help but do this myself, and of course I had to do one for Jeff too. (see the episode thumbnail)

A big discussion around this trend is, of course, the reaction of human artists and illustrators, who point out that this AI-driven ability often comes from the use of unlicensed work and that it threatens creative livelihoods.

Notion Mail: A New AI-Powered Email App

Notion introduced a new email app called Notion Mail, the third product in its productivity suite alongside Notion and Notion Calendar. It’s available on web and Mac, with iOS in testing. As Notion does, it emphasizes simplicity over clutter and is driven by AI-powered organization. You can have emails labeled automatically based on their content, not just sender or subject, and create custom labels and views (essentially filters). AI-assisted writing tools are included, and it’s currently limited to Gmail accounts.

Sounds a bit like Google Inbox—another Google product ahead of its time?

Google’s DolphinGemma: Decoding Dolphin Communication

Google collaborated with the Wild Dolphin Project and Georgia Tech to develop DolphinGemma, an advanced AI model for decoding and generating dolphin vocalizations. It’s compact and can run on a Pixel. It doesn’t directly translate dolphin language, but it identifies patterns and structures in vocalizations. It will be released as an open model.

This could be a big step in understanding dolphin communication. Dolphin language, built from clicks, whistles, and burst pulses, has a complexity that parallels human language in some ways. Apparently, these sounds combine to signal nuances like identity, relationships, and basic abstract concepts, which is different from the more limited symbolic reference in the communication of animals like dogs, cats, and mice.

The Curious Case of “Vegetative Electron Microscopy”

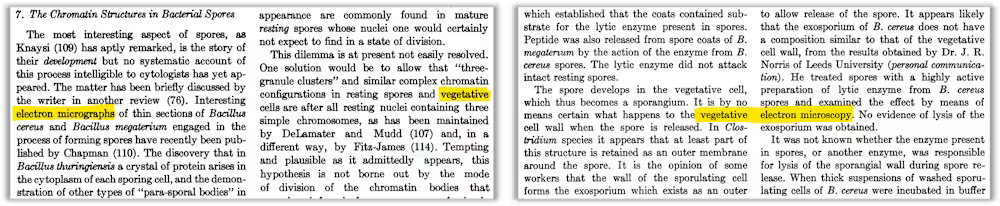

“Vegetative Electron Microscopy” is a phrase that has begun to appear in scientific papers but doesn’t actually refer to anything. Its ongoing appearance is the result of a series of accidental errors that led to AI-driven reinforcement. The authors of this paper went to great lengths to trace the origins of the phrase, and you really must take a look.

It started with a digitization mistake involving journals from the 1950s, in which text from different columns of a scanned page was combined. The resulting phrase was later spread around by translation errors in scientific works. Now, AI models have those references in their data sets and the journey continues! You could call it a “digital fossil.” Of course, if AI feeds on its own entrails, that means Vegetative Electron Microscopy no longer means absolutely nothing. It actually means… this entire story. (if that makes any sense)

Follow Jeff’s work: jeffjarvis.com

Follow Jason: youtube.com/@JasonHowell

Everything you need to know about this show can be found at our site.

Again, please leave a review in Apple Podcasts if you love what we are doing.

And finally, if you really REALLY love this show, support us on Patreon.

Ad-free shows

A Discord community

An AI Inside T-shirt when you become an Executive Producer!

Executive producers of this show include: DrDew, Jeffrey Marraccini, WPVM 103.7 in Asheville NC, Dante St James, Bono De Rick, Jason Neiffer, and Jason Brady!!

Thank you! See you next Wednesday on another episode of AI Inside.